A weirdly broken AI

The other day someone told me about a program that will generate scenes to match a text description. I’m always excited to test out algorithms like this because the task of “draw anything a human asks for” is so hard that even state-of-the-art results are hilariously bad.

I tried a few test prompts.

“Nicest alien wants to say hi”

“The end of the world”

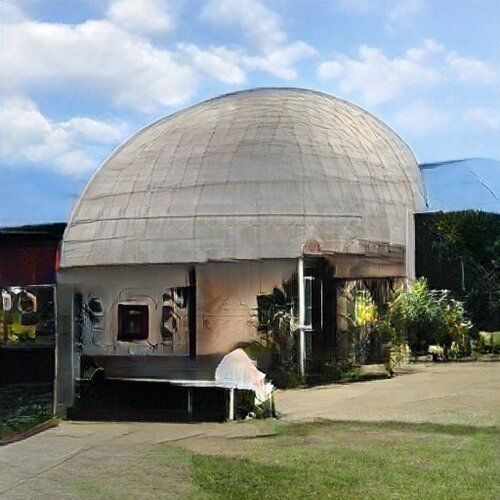

“A planetarium full of marbles”

Depending on what you ask for, it can seem for a while like maybe the neural net is doing well. But then you get to results like this:

“Horse riding a bicycle”

“Tyrannosaurus eating pizza”

Why does it sometimes generate something that’s a halfway recognizable attempt at completely the wrong thing?

I think I figured it out. Look at this series of images.

Triceratops, Tree frog, Hourglass, Fireplace… It’s matching every prompt with a vaguely similar word.

And because I’ve played a lot with image-generating neural nets, I even recognized the categories: they’re all from Fei-Fei Li’s famous ImageNet project. So if a phrase isn’t already an ImageNet category (like “horse riding a bicycle”), this program looks for its closest match. In this case it seems to have gone for “house finch”, so it’s going for similarity in spelling rather than in meaning. “The end of the world” turned into “hen of the woods”, a type of large ruffled mushroom. I’m not sure why “tyrannosaurus eating pizza” seems to have turned into “measuring cup”. The “nicest alien” is slightly easier to explain: there are a LOT of dog categories in ImageNet, so chances are decent that a given phrase will match a dog.

Here’s an interesting one: “God”

There’s no “God” category in ImageNet, but there is one for “hog”.

As far as I can tell, this program isn’t being used anywhere other than this one weird demo site, so there’s no harm in it being blissfully, weirdly wrong about stuff. But it does give me a small satisfaction to think that I may have figured out HOW it’s being so vividly wrong. Still puzzling about that tyrannosaurus rex, though.

AI Weirdness supporters get bonus content: I’ve collected a few more examples of prompts + results, some of which I find really baffling. Or become a free subscriber to get new AI Weirdness posts right in your inbox!

You can explore some of the ImageNet categories (and even mix them together, or compute the opposite of guacamole) using Artbreeder.com (the “general” image type). If you figure out what some of these mystery phrases mapped to, please tell me in the comments!

UPDATE: Vincent Tjeng has a detailed explanation. Basically, it’s matching your input phrase to whichever ImageNet category it can be turned into with the fewest edits.
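Here’s a rough Python sketch of that idea, assuming plain Levenshtein edit distance (single-character insertions, deletions, and substitutions) on lowercased text. The category list is a tiny hypothetical stand-in built from the examples in this post. The real program presumably compares against the full ImageNet label set, and I don’t know exactly how it normalizes text or breaks ties.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions, and
    substitutions needed to turn a into b (classic dynamic programming)."""
    # prev[j] = distance between the first i-1 chars of a and the first j chars of b
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]  # turning the first i chars of a into "" takes i deletions
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,               # delete ca
                curr[j - 1] + 1,           # insert cb
                prev[j - 1] + (ca != cb),  # substitute (free if the chars match)
            ))
        prev = curr
    return prev[-1]


# Hypothetical subset of category names, spelled as they appear in this post.
CATEGORIES = ["triceratops", "tree frog", "hourglass", "house finch",
              "hen of the woods", "measuring cup", "hog"]


def closest_category(prompt: str, categories=CATEGORIES) -> str:
    """Return the category with the smallest edit distance to the prompt.
    Assumption: comparison is case-insensitive, and ties go to whichever
    category comes first in the list (that's how Python's min() behaves)."""
    p = prompt.casefold()
    return min(categories, key=lambda c: levenshtein(p, c.casefold()))


print(closest_category("God"))                   # hog
print(closest_category("The end of the world"))  # hen of the woods
```

“God” and “hog” are only two letter swaps apart, while every other name on this little list needs at least six edits, so even a tiny list is enough to reproduce the “hog” result.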

My book on AI is out, and you can now get it in any of these ways! Amazon - Barnes & Noble - Indiebound - Tattered Cover - Powell’s - Boulder Bookstore