Depth of field fails

You might think of AI as a thing that only runs on powerful cloud computing servers, but these days you'll also find it in your phone. Unlike the AI in science fiction, each AI in your phone is limited to just one narrow task - predicting the next word in your sentence, or transcribing your voicemails, or recognizing your speech.

One of those tasks? To make your smartphone camera pretend to have a much better lens than it actually does.

In professional portraits taken with a fancy camera, you may notice that the background is artfully blurred. That's because they use a camera lens with a very narrow depth of field, so that just the subject's face - sometimes just their eye - is in focus. These lenses can get expensive, with a lot of heavy precision glass, and they also make it tricky to focus on just the right spot: a tiny margin of error can turn a face into an out-of-focus disappointment.

So, smartphones use AI to cheat.

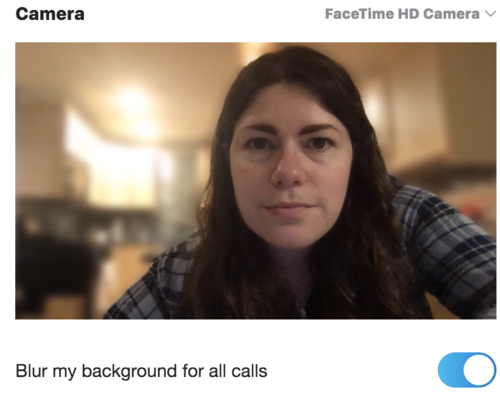

With its knowledge of how people and objects are generally shaped, a machine learning algorithm does its best to figure out where the human is, keeps the human in focus, and then artfully blurs the background. Depending on the phone, it might be called something like portrait mode, live focus, or selfie focus. When it works, it looks like this:

Notice how my face and arms are equally in focus, but the background is very blurry - without AI’s sleight-of-hand, the background would be sharper, or else my arms would be noticeably out of focus.
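Once the AI has decided which pixels belong to the human, the blurring itself is the easy part. Here's a minimal Python sketch of that compositing step, assuming the AI has already handed over a "person mask" (1 where it thinks the human is, 0 for background). The names `box_blur` and `fake_portrait` are made up for illustration, and a real phone would use a fancier, lens-like blur on full-color images rather than this simple box average on a grayscale grid.

```python
def box_blur(img, radius=1):
    """Replace each pixel with the average of its neighbours within `radius`.

    Pixels that would fall outside the frame are simply skipped.
    """
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0.0, 0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    yy, xx = y + dy, x + dx
                    if 0 <= yy < h and 0 <= xx < w:
                        total += img[yy][xx]
                        count += 1
            out[y][x] = total / count
    return out


def fake_portrait(img, person_mask, radius=1):
    """Keep 'person' pixels sharp and swap everything else for its blurred version.

    `person_mask` is a hypothetical stand-in for the AI's guess: 1 = person, 0 = background.
    """
    blurred = box_blur(img, radius)
    return [
        [m * sharp + (1 - m) * soft for sharp, soft, m in zip(row, brow, mrow)]
        for row, brow, mrow in zip(img, blurred, person_mask)
    ]


if __name__ == "__main__":
    image = [[0, 0, 0], [0, 9, 0], [0, 0, 0]]  # one bright "face" pixel
    mask = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]   # the AI says only the centre is person
    for row in fake_portrait(image, mask):
        print(row)
    # prints [2.25, 1.5, 2.25] / [1.5, 9.0, 1.5] / [2.25, 1.5, 2.25]
```

Everything interesting, and every weird failure in this post, lives in that mask: if the AI decides a book cover or a cat's face is background, the compositing step will dutifully blur it.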

For this post I used Skype's "Blur my background for all calls" mode, and used my computer's webcam as the camera. This way I could be sure it was relying 100% on AI analysis of a 2D image (as I'll mention later, this works much better on smartphones that have dual cameras and can use them to get extra depth info).

The Skype blurry background AI is hyper-focused on human faces and bodies to the exclusion of pretty much all else, which can lead to it accidentally censoring book covers, orchids, and the faces of cats.

It's probably blurring so aggressively because, from its designers' point of view, it's better to mistakenly blur the foreground than to fail to blur something the caller wanted hidden. And it's making lots of mistakes because it depends on that single 2D webcam image, with no other way to get depth information. That also means I don't need to show it 3D objects for it to have a go at blurring the background.

I used the extremely high-tech method of holding up a copy of Sister Wendy’s History of Painting to my webcam, and began to explore just how abstract a painting could get before the AI could no longer outline the human. The answer? Quite abstract.

Ancient Egyptian paintings? No problem.

Even "Death and Fire" by Paul Klee is handled passably. (A photorealistic skeleton, on the other hand, flickers wildly between in-focus and background, as if the AI can't decide whether the skeleton is really the one making the Skype call.)

This plush giraffe is recognized as the entity making this Skype call, but the AI has less experience with giraffe callers and so blurs out its ears and horns. It includes a tiny wedge of background lamp, though, as if it thinks the giraffe might be wearing a tiny glowing hat.

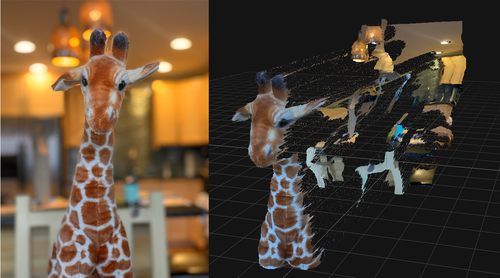

Compare this to how my iPhone XS reacted to a similar plush giraffe image.

To blur the background, the iPhone XS doesn't have to rely on its past knowledge of plush giraffes. It has two cameras at slightly different positions and with different fields of view, and by comparing their two images it can figure out roughly how far away each object is. (Marques Brownlee has a video, also written up, with more explanation.) I used Focos to look at the depth map my iPhone made, and it's evident that it works best for objects within a certain distance of the camera - notice how much less depth detail the background has, and how wobbly the chair back is. As an optical engineer, I'm really interested in the optics of this two-lens method, so I might revisit it in another blog post.
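The two-camera trick boils down to parallax: a nearby object shifts a lot between the two views, while a faraway one barely moves. For an idealized pair of identical, side-by-side cameras, the textbook relation is depth = focal length × baseline / disparity, where disparity is how many pixels an object shifts between the two images. Here's a small Python sketch of that relation. The focal length and baseline below are made-up round numbers, not the iPhone XS's actual specs, and the phone's real pipeline (with two different lenses) is certainly more involved. It does hint at one plausible reason the depth map gets sloppier with distance, though.

```python
# Hypothetical camera parameters, chosen for round numbers (NOT real iPhone XS specs).
FOCAL_PX = 1000.0    # focal length, expressed in pixels
BASELINE_MM = 10.0   # distance between the two cameras, in millimetres


def depth_from_disparity(disparity_px, focal_px=FOCAL_PX, baseline_mm=BASELINE_MM):
    """Idealized pinhole stereo: depth (mm) of a point that shifts `disparity_px` between views."""
    if disparity_px <= 0:
        # Zero disparity would mean the point is infinitely far away.
        raise ValueError("disparity must be positive")
    return focal_px * baseline_mm / disparity_px


def one_pixel_error(disparity_px):
    """How far the depth estimate moves if the disparity is misjudged by one pixel."""
    return depth_from_disparity(disparity_px) - depth_from_disparity(disparity_px + 1)


if __name__ == "__main__":
    for d in (20, 2):
        print(f"disparity {d:>2} px -> depth {depth_from_disparity(d):7.1f} mm, "
              f"1-px error moves it by {one_pixel_error(d):6.1f} mm")
```

With these made-up numbers, misjudging the disparity by a single pixel moves an object at half a meter by about 24 mm, but moves an object at five meters by about 1.7 m. Distant backgrounds produce tiny disparities, so small matching errors there turn into big, wobbly depth errors.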

AI Weirdness supporters get bonus content: The Skype background blur AI is frustrated by skeletons. (Or become a free subscriber to get new AI Weirdness posts in your inbox!)

My book on AI is out, and you can now get it in any of these ways! Amazon - Barnes & Noble - Indiebound - Tattered Cover - Powell's