The world through the eyes of a neural net

What would happen if I fed a video to an AI that turns images into blobs of labeled objects, and then fed THAT video to another AI that attempts to reconstruct the original picture from those labeled blobs?

I used runwayml’s new chaining feature to tie two algorithms together. The first is DeepLab, a neural net that detects and outlines objects in an image. In other words, it’s a segmentation algorithm.

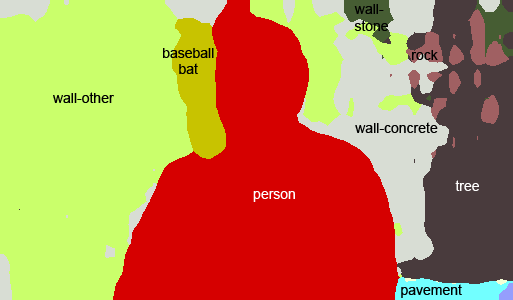

So DeepLab segments this frame of The Last Jedi:

into this schematic (I added the labels so you can see the categories).

You will notice that DeepLab correctly detected Luke as a person (in fact we will see later that DeepLab is often overgenerous in its person detection), but that without a category for “lightsaber” it has decided to go with “baseball bat”.

The second algorithm I used is NVIDIA’s SPADE, which I’ve talked about before. Starting from a segmented image like the one above, SPADE tries to paint in all the missing detail, including lighting and so forth. Here’s its reconstruction of the original movie frame:

It has decided to go with an aluminum bat and, perhaps sensibly, has decided to paint the “human” blob with something resembling a baseball jersey and cap. That same colorfest shirt actually shows up fairly frequently in SPADE’s paintings.

So, feeding each frame through DeepLab and then SPADE in this way, we arrive at the following reconstruction of the Crait fight scene from The Last Jedi. (The background flashes a lot b/c the AIs can’t make up their mind about what the salt planet’s made of, so be aware if you’re sensitive to that sort of thing.)
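Here is a rough sketch of what "chaining" the two models frame by frame amounts to. The functions `toy_segment` and `toy_synthesize` are hypothetical stand-ins, not DeepLab, SPADE, or RunwayML's API; they only share the same shape contract (RGB frame in, per-pixel label map in the middle, RGB frame out). The class IDs are also made up for this sketch.

```python
import numpy as np

# Hypothetical class IDs for this sketch only; real models use their own label tables.
SNOW, PERSON = 0, 1


def toy_segment(frame: np.ndarray) -> np.ndarray:
    """Stand-in segmenter (NOT DeepLab): dark pixels become PERSON, bright ones SNOW."""
    brightness = frame.mean(axis=2)  # H x W average of the RGB channels
    return np.where(brightness < 128, PERSON, SNOW).astype(np.int64)


def toy_synthesize(labels: np.ndarray) -> np.ndarray:
    """Stand-in painter (NOT SPADE): fill each label with a flat colour.

    A real generator would invent texture, lighting and detail instead.
    """
    palette = np.array([[240, 240, 250],   # SNOW
                        [200, 30, 30]],    # PERSON
                       dtype=np.uint8)
    return palette[labels]  # look up a colour for every pixel's label -> H x W x 3


def reconstruct_video(frames, segment, synthesize):
    """Chain segmenter and painter, handling each frame independently."""
    return [synthesize(segment(frame)) for frame in frames]


frames = [np.zeros((2, 2, 3), dtype=np.uint8),        # an all-dark frame
          np.full((2, 2, 3), 255, dtype=np.uint8)]    # an all-bright frame
reconstructed = reconstruct_video(frames, toy_segment, toy_synthesize)
```

Note that nothing in this loop connects one frame's labels to the next frame's, so if the segmenter changes its mind about the ground between frames, the painted output changes too. That is one plausible reason a frame-by-frame chain like this flickers.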

I’d like to highlight a couple of my favorite frames.

DeepLab is not sure how to label the salt flat they’re fighting on, so sometimes it goes with pavement or sand. Here it has gone with snow.

SPADE, therefore, faced with two person-blobs on snow, decides to put the people in snowsuits. Also there is a surfboard.

For this frame, DeepLab makes a couple of choices that make life difficult for SPADE.

DeepLab, in its eagerness to see humans everywhere, has decided the AT-ATs in the background are people. It also decides that part of Kylo is a fire hydrant.

SPADE has to just work with it. The person-blobs in the background become legs in snowsuits. It makes its best attempt at a fire hydrant floating in a sea of “person” with flecks of car.

It will be amazing to see what these kinds of reconstructions end up looking like as algorithms like DeepLab and SPADE get better and better at their jobs. Could one segment a scene from Pride and Prejudice and replace all the “human” labels with “bear”? Or “refrigerator”?
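The "replace every human with a bear" idea would come down to editing the segmentation map before handing it to the painting step. A minimal sketch, assuming a map stored as a NumPy array of integer class IDs; the `LABELS` table and `swap_label` function are hypothetical and do not match any real DeepLab or SPADE label set.

```python
import numpy as np

# Hypothetical label table; real checkpoints define their own class IDs.
LABELS = {"snow": 0, "person": 1, "bear": 2, "refrigerator": 3}


def swap_label(label_map: np.ndarray, old: str, new: str) -> np.ndarray:
    """Return a copy of the map with every `old` pixel relabelled as `new`."""
    swapped = label_map.copy()
    swapped[label_map == LABELS[old]] = LABELS[new]
    return swapped


scene = np.array([[0, 1],
                  [1, 0]])                       # snow and person pixels
bear_scene = swap_label(scene, "person", "bear")  # person pixels now say "bear"
```

The edited map keeps the original blob outlines, so the painter would be asked to draw bears in exactly the poses the people occupied.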

Experiment with runwayml here!

You can also check out a cool variation on this where Jonathan Fly gives SPADE not a DeepLab segmentation but frames from cartoons or 8-bit video games.

AI Weirdness supporters get bonus material: more Star Wars reconstruction attempts (did you catch the teddy bears in The Last Jedi?) and an epic Inception fail. Or become a free subscriber to get new AI Weirdness posts in your inbox!