Apparently I am a robot

As AI-generated text is getting better, it's getting easier to pass it off as human-written.

That's not to say it's as good as human-written. Its goal is to sound correct rather than be correct, so it has a well-known tendency to confidently make stuff up. But there's a market for mediocre writing, whether you're trying to lure traffic to a blog or pass an assignment.

Wouldn't it be nice if there were a way to detect AI-generated text? In November 2019, along with the full release of GPT-2, OpenAI also released a GPT-2 output detector. There's an online demo hosted at Hugging Face, as well as a browser plugin called GPTrueOrFalse. Although it's trained specifically to detect GPT-2's generated text, I've noticed that it's also fairly good at detecting text from completely different generators, including ones that are much more advanced or much less advanced.

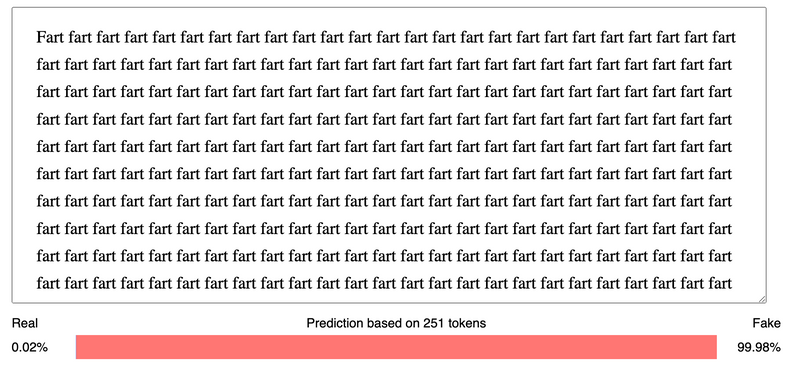

So, I tried it on an excerpt from my book on AI:

92.99% fake? Apparently I am a robot.

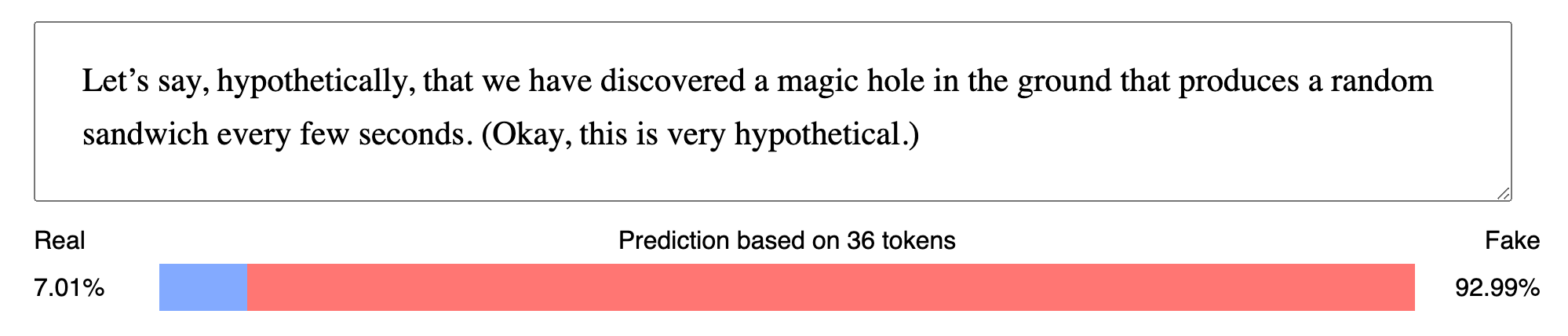

But wait! The preamble to the GPT-2 output detector demo mentions that the results start to become accurate after 50 tokens, and this was only 36 tokens. I added a couple more sentences and tried again.

So does this mean I'm really only 50% a robot? Or that robots do 50% of my work?

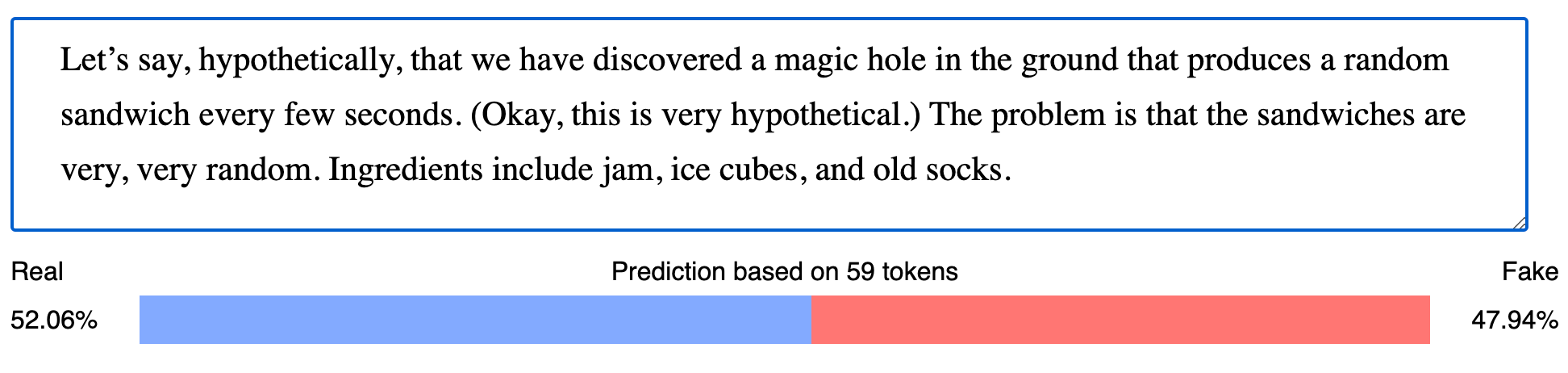

I added lots more text and it's back to nearly 100% robot.

In fact, even when I maxed out the detector's length at 510 tokens (just under 500 words), it was still rating me as about 8% human.
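The two length limits mentioned so far can be written down as a small guard. This is only a sketch: the function name and the status labels are made up for illustration, the 50 and 510 figures are the ones quoted above, and treating exactly 50 tokens as "long enough" is an assumption. Counting the tokens would need the detector's own tokenizer, which isn't shown here.

```python
# Hypothetical helper: classifies a sample by token count only.
# It does not run the detector or count tokens itself.

MIN_RELIABLE_TOKENS = 50  # demo preamble: results get accurate after ~50 tokens
MAX_TOKENS = 510          # the detector's maximum input length, per the post


def length_status(n_tokens: int) -> str:
    """Return 'too short', 'ok', or 'over limit' for a given token count."""
    if n_tokens < MIN_RELIABLE_TOKENS:
        return "too short"   # score is not meaningful yet
    if n_tokens > MAX_TOKENS:
        return "over limit"  # the sample exceeds the detector's maximum input
    return "ok"


if __name__ == "__main__":
    for count in (36, 510):
        print(count, length_status(count))
```

Passing this check only means the sample is inside the detector's intended length range, not that the verdict is right: the 510-token excerpt above counts as "ok" here and was still rated about 8% human.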

Up to this point, I thought the GPT-2 detector worked pretty well: as long as the text sample was long enough, it matched what I knew or guessed about the text's origin. And it doesn't think every excerpt from my book is AI-generated. But the fact that it insisted that even one excerpt was not written by a human means that it's useless for detecting AI-generated text.

The GPT-2 detector is especially useless in cases like detecting cheating, where being deemed a robot could carry huge penalties. I've even suggested it for this purpose in the past, and I have since completely changed my mind. The bloggers responsible have been sacked.

Bonus post: I get ChatGPT to rate another neural network's recipes. It is ... generous.