From Cat to Kitchen: a cautionary tale

I’m not sure what was more unsettling: my recent failed attempt to train a neural net called StyleGAN2 to generate screenshots from The Great British Bakeoff, or my successful attempt to train StyleGAN2 to generate pictures of my cat. The problem with the cat pictures wasn’t that they were terrible; the end result looked a lot like the few thousand virtually identical images I fed it. The problem was that, to save training time, I had started with a version of StyleGAN2 that had been trained on human faces and there was an, um, intermediate stage.

Whichever of those two options you may think was worse, Twitter user @asjmcguire helpfully suggested a way to make things even more awful: what would happen if I trained a Great British Bakeoff model with the images of my cat as a starting point? Would we get furry cat-people in a nightmarish kitchen?

Let’s find out. I used runwayml to open my cat-generating model, gave it about 50,000 frames from the Great British Bakeoff, and started the training process.

Step 1 looks like cats, because that was the starting point. These are all the neural net’s attempts to replicate frames from the same 2-minute video of my cat. Most of them aren’t too bad. Note that they’re all very similar to each other, since the training data didn’t have very much variety.

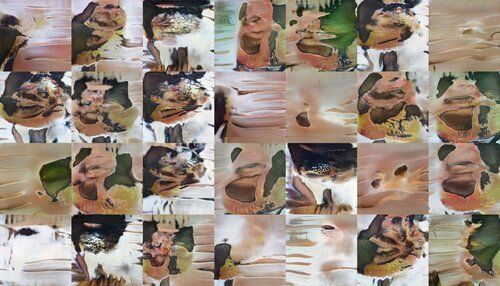

By step 340, things are definitely happening. It doesn’t entirely look like the cats are being transformed into people - it looks more like they’re being deconstructed. Rather than cats turning into people in kitchens, it looks more as if the cats are trying to become the entire kitchen. This may not go well.

By step 690, nothing is recognizable any more. And if you’re used to training GANs, you may be starting to get nervous at this point, as the images start to separate into uniform types and everything starts to get stripey. This can be a sign that the GAN is struggling, and that its struggles could lead to the dreaded condition called mode collapse, where the generator gets stuck producing only a few kinds of image. If this progresses, everything can dissolve into chaos or even apparently catch fire.

So obviously let’s see what happens next.

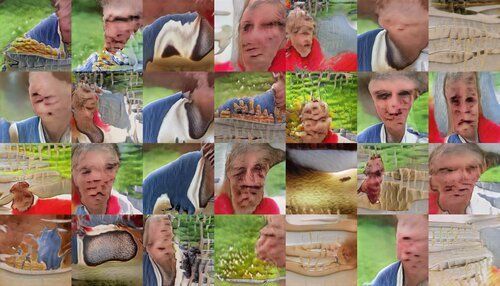

At iteration 1150, the stripes have turned into ripples in spacetime. The color scheme is looking a bit more like the GBBO. One image has something that might be an attempt at bread, except it is forking horizontally into floating strands. Humanoids with aggressive five-o’clock shadows have started to emerge. Are the images picking up a bit more variety?

I stopped at iteration 3000, where we have this. It’s… well, it’s improved.

But it’s still a much, much worse job than the earlier Great British Bakeoff GAN, trained on the same dataset but from a different starting point. Perhaps in several thousand more iterations it will start to look as good - okay, as “good” - as my earlier attempt. Maybe - and I’m just speculating - starting the finetuning process from a model that is already producing a lot of diversity makes the finetuning go better. Maybe for even better results, I should start not with a StyleGAN2 that produces human faces, but with one that produces a wider variety of objects.

Let’s take a good long horrible look at one of these images.

One thing you’ll notice is how darn stripey everything is when the GAN is struggling. Basically the neural net runs out of new ideas and keeps returning to slight variations on stripes that kinda work. Stripes are its go-to because so much of its underlying math is based on combinations of stripe patterns of different frequencies. To get smooth features and recognizable objects, it has to balance the patterns finely so that they build, say, an eye in one spot and suppress eyes everywhere else. When it’s not so good at balancing them you get… rows of extra mouths. Yay.
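As a toy illustration of that balancing act (not StyleGAN2’s actual architecture, just a one-dimensional sketch of the general idea), the Python below builds a row of “pixels” out of cosine stripes. When 32 stripe frequencies all have a crest at the same spot, they reinforce each other there and largely cancel elsewhere, leaving a single feature. One stripe frequency on its own just gives a row of identical crests. The row length `N`, the feature position `x0`, the frequency choices, and the helper names `stripe` and `count_strong_peaks` are all hypothetical choices made for this example.

```python
# Toy 1D illustration only; this is not how StyleGAN2 is actually implemented.
import numpy as np

N = 256    # pixels in one row of our toy "image" (hypothetical)
x0 = 100   # where we want a single feature, say an eye (hypothetical)
x = np.arange(N)

def stripe(freq, center):
    """A stripe pattern: a cosine with `freq` full cycles across the row,
    shifted so that one of its crests sits at `center`."""
    return np.cos(2 * np.pi * freq * (x - center) / N)

# Balanced: 32 frequencies, all with a crest at x0. They add up to 32 at x0
# and largely cancel each other everywhere else.
balanced = sum(stripe(k, x0) for k in range(1, 33))

# Unbalanced: one frequency by itself is just stripes, with an equally
# strong crest every N / 8 = 32 pixels.
unbalanced = stripe(8, x0)

def count_strong_peaks(signal, frac=0.5):
    """Count local maxima that reach at least `frac` of the signal's maximum.
    np.roll wraps around the ends, which is fine here because every stripe
    has a whole number of cycles across the row."""
    left = np.roll(signal, 1)
    right = np.roll(signal, -1)
    is_peak = (signal > left) & (signal > right) & (signal >= frac * signal.max())
    return int(is_peak.sum())

print(count_strong_peaks(balanced))    # 1: one "eye"
print(count_strong_peaks(unbalanced))  # 8: a row of extra "eyes"
```

The balanced sum is a standard Fourier-series effect: cosines that share a crest add up at that point and interfere with each other away from it. Leave most of them out and the cancellation fails, so the feature repeats across the row, which is roughly the one-dimensional version of extra mouths.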

Made with runwayml - you can do this too! Got a cat? A bunch of Easter eggs? A lemon? It’s now seriously easy to generate your own horrible abominations. The simpler and more consistent your subject, the more recognizable your results will be.

AI Weirdness supporters get bonus content: there were far too many amazing pictures to fit in this post. Or become a free subscriber to get new AI Weirdness posts in your inbox!

My book on AI is out, and you can now get it any of these several ways! Amazon - Barnes & Noble - Indiebound - Tattered Cover - Powell’s - Boulder Bookstore (has signed copies!)